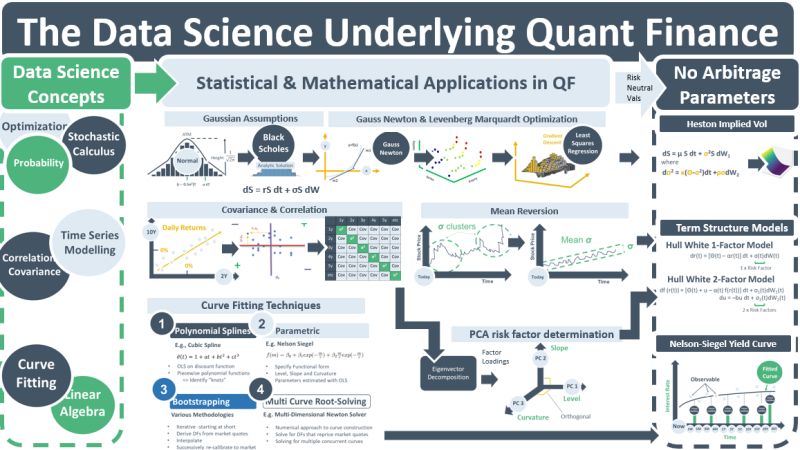

When financial markets first emerged, the data they created became the basis for QF. Prices from different markets exhibited patterns for growth, correlation and mean-reversion that could be analysed statistically. When Ito invented stochastic calculus in the 1950s, solutions were found for the non-linear relationships between option prices and their underlyings. Later, Gauss-Newton and Levenberg-Marquardt optimization approaches were used to derive vols from option prices and calibrate parameters for models that could be used to simulate price distributions. As the concept of the yield curve became more important, curve fitting techniques were developed to fit smooth curves to data that was dependent on market liquidity.

The creation of the internet in the 1980s was the catalyst for the vast amounts of data available today for analysis of on-line human behaviour. The ML/AI paradigm emerged to discern patterns and relationships in the data. Recommender systems, NLPs and Gen AI are some of the applications that came from it.

The data generated by financial markets and the data generated by social-media have one thing in common: they are generated by human activity. The data is therefore random in nature requiring probabilistic methods to analyse it. Under the ML/AI approach, models were trained on data sets to discern patterns & relationships. Data science was born and due to similarities of data sets it was able to use methods made popular in QF. E.g., gradient descent approaches used to calibrate Hull-White parameters are also used in AI to optimize weights in NNs to minimize loss fns. And polynomial curve fitting approaches such as those used in Nelson-Siegel methods are being used in NLP and facial recognition routines.

In QF, reading large amounts of time-series data to determine parameters such as growth, vol, correlation & mean-reversion is computationally expensive. In 1973, Black, Scholes & Merton developed a theory using arbitrage arguments that enabled these parameters to be derived from today’s quoted prices – no longer was it required to read large volumes of time-series data. The theory was risk-neutral valuations (RNV). It became the basis for the no-arbitrage models used for derivative pricing today. The removal of the need to mine history enabled massive growth in the derivative industry.

In ML/AI, a similar story unfolded. True data-generating processes were computationally expensive. Techniques were developed that allowed algorithms avoid the need to mine complete data sets. Empirical risk-minimization, fn approximation, and generative-discriminative models are examples. While these techniques are not based on finance theories – they are advances in data science – they had a similar impact on data volumes as RNV did in QF. They led to data-processing short-cuts and the high-speed responses that are fuelling the popularity of AI models.