This week in my series on how GoldenSource partners with data vendors, I have a quick follow-up to last week’s post on our unified data model and how it’s being used to generate more comprehensive and actionable insights for the buy side and the sell side.

The ultimate goal in any exploration of multiple sources of data is to arrive at a single source of truth, but it’s still important to retain all the individual contributing views. There are two critical reasons why.

First, full auditability is essential. Even the most accurate and refined Gold Copy can lose credibility if its contents cannot be traced back to the original sources. Stakeholders must be able to understand exactly how and why a particular value was chosen (whether for a security, an entity, a corporate action, or, of course, a price) and what data contributed to that decision. Without this transparency, trust in the data diminishes.

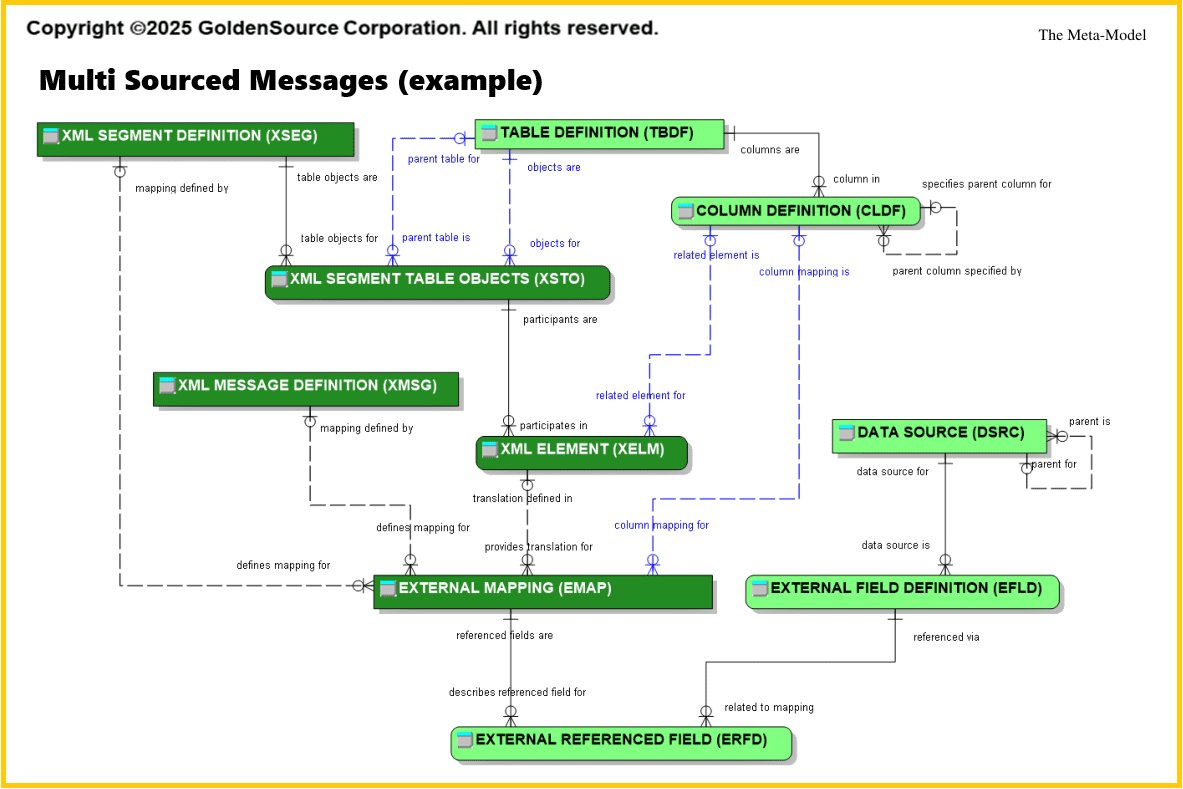

In practice, that means your data model has to record and represent which external or internal data source contributed a given data set to the Gold Copy and, where needed, do so down to the granularity of the individual data point. Any subsequent processing can then rely on that information for lineage purposes, as well as for researching differences and agreements between the data providers’ views of the data in question.
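
To make the idea of data-point-level lineage concrete, here is a minimal Python sketch. It is not GoldenSource's data model: the class names (`Contribution`, `GoldDataPoint`), the source names, the prices, and the simple "highest-priority source wins" rule are all assumptions chosen purely for illustration. The point is only that the chosen value, the source it came from, and every contributing view are stored together.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass(frozen=True)
class Contribution:
    """One source's view of a single data point (hypothetical structure)."""
    source: str
    value: Any


@dataclass
class GoldDataPoint:
    """A Gold Copy value that keeps its lineage alongside it."""
    attribute: str
    value: Any
    chosen_source: str
    contributions: list[Contribution] = field(default_factory=list)

    def disagreeing_sources(self) -> list[str]:
        # Sources whose view differs from the value selected for the Gold Copy.
        return [c.source for c in self.contributions if c.value != self.value]


def build_gold_data_point(attribute, contributions, source_priority):
    """Pick the value from the highest-priority source, retaining every view.

    The priority rule is an assumption; real selection rules are usually richer.
    """
    if not contributions:
        raise ValueError("at least one contribution is required")
    rank = {source: i for i, source in enumerate(source_priority)}
    # Sources missing from the priority list are ranked last.
    winner = min(contributions, key=lambda c: rank.get(c.source, len(rank)))
    return GoldDataPoint(attribute, winner.value, winner.source, list(contributions))


# Illustrative values only.
point = build_gold_data_point(
    "close_price",
    [
        Contribution("vendor_b", 101.27),
        Contribution("vendor_a", 101.25),
        Contribution("internal_desk", 101.25),
    ],
    source_priority=["vendor_a", "vendor_b", "internal_desk"],
)
print(point.value, point.chosen_source, point.disagreeing_sources())
```

Because the contributions travel with the chosen value, a later process can answer both "where did this come from?" and "who disagreed?" without going back to the original feeds.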

Second, many consuming systems still depend on the data as delivered by specific sources. This reliance may be rooted in historical practices or integration dependencies, but it remains a reality for many organizations. A successful data management system must be flexible enough to support both the harmonized Gold Copy and the ability to deliver data in the form expected by downstream systems.

Again, in practical terms, that means the data model must be capable of recording the raw (or semi-raw) data alongside its harmonized and standardized version, and of making either available on its own or in combination, according to each consumer’s preferences. As you will have guessed, a data model and its accompanying infrastructure can solve this common challenge in more or less elegant and efficient ways.
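
As a rough illustration of keeping raw and harmonized data side by side, the Python sketch below stores both on one record and lets each consumer ask for the view it expects. Everything here is hypothetical: the `SecurityRecord` class, the `deliver` function, the vendor names, and the field names are assumptions for the example, not an actual product API or vendor feed layout.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class SecurityRecord:
    """Holds the harmonized view plus each source's data as delivered."""
    security_id: str
    harmonized: dict[str, Any]
    raw_by_source: dict[str, dict[str, Any]] = field(default_factory=dict)


def deliver(record: SecurityRecord, preferred_source: Optional[str] = None) -> dict[str, Any]:
    """Return the harmonized view, or one source's raw view if requested."""
    if preferred_source is None:
        return dict(record.harmonized)
    if preferred_source not in record.raw_by_source:
        raise KeyError(f"no raw data retained for source {preferred_source!r}")
    return dict(record.raw_by_source[preferred_source])


# Illustrative data: two vendors describe the same coupon in different shapes.
record = SecurityRecord(
    security_id="SEC-001",
    harmonized={"coupon_rate_pct": 4.25, "currency": "USD"},
    raw_by_source={
        "vendor_a": {"CPN": "4.25", "CRNCY": "USD"},
        "vendor_b": {"couponRate": 0.0425, "ccy": "usd"},
    },
)

print(deliver(record))               # harmonized Gold Copy view
print(deliver(record, "vendor_a"))   # data in the form vendor_a delivered it
```

A legacy system that was built against one vendor's layout can keep requesting that layout, while newer consumers read the harmonized view from the same record.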

Next week, I’ll cover how having a Proactive Change Management process helps anticipate and adapt to new regulations, emerging asset classes, and shifting data types.