This is the second installment in my series on how GoldenSource collaborates with data vendors worldwide. In this edition, I want to explore the features of a flexible data platform and how it seamlessly integrates diverse data sources to meet the unique needs of our clients.

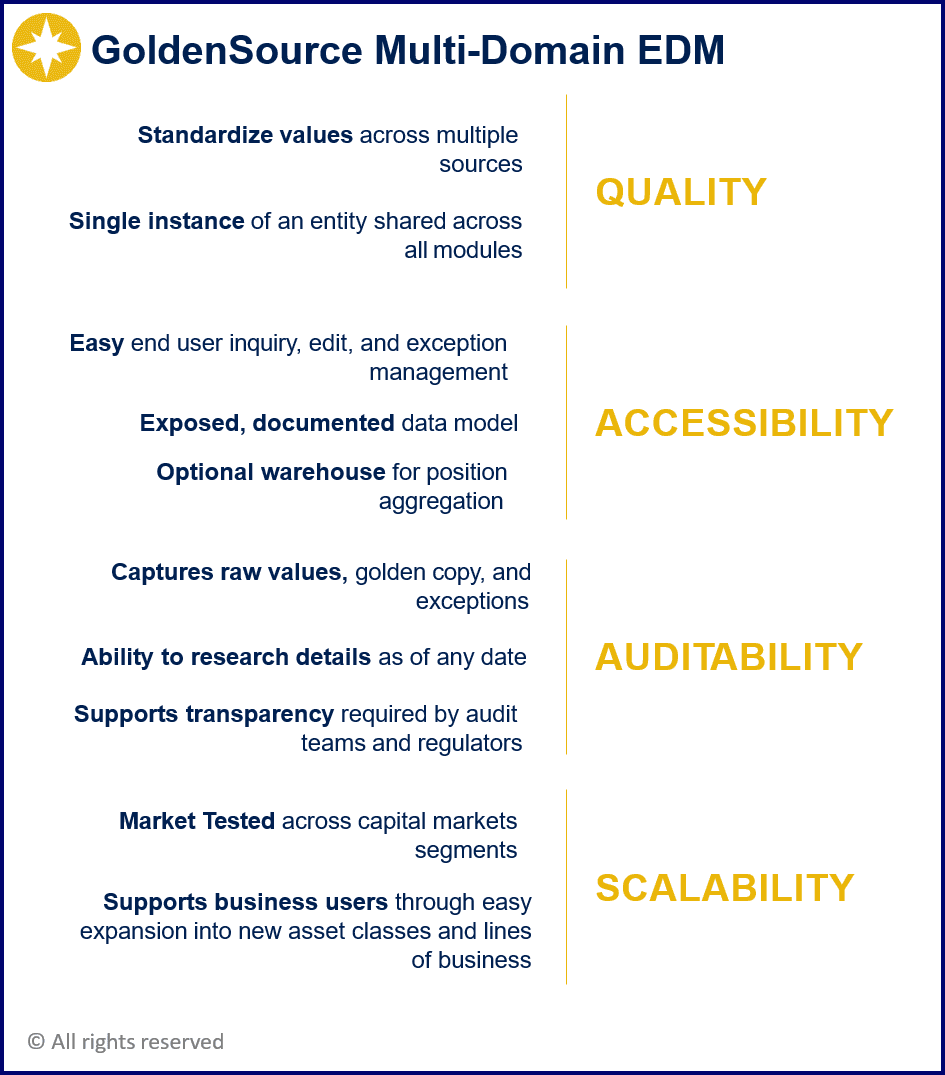

A multi-sourced data platform must have specific capabilities to be truly effective. At its core, it should identify and record each data source, allowing users to prioritize sources at varying levels of granularity—ranging from broad categories like asset classes or data types to detailed preferences by region, country of domicile, or even individual data fields.
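
To make the idea of prioritizing sources at different levels of granularity more concrete, here is a minimal Python sketch. It is not GoldenSource's implementation; the `PriorityRule` class, the `pick_value` function, the vendor names, and the "most specific rule wins" policy are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PriorityRule:
    """Hypothetical rule: a source ranking plus the scope it applies to."""
    ranking: tuple[str, ...]            # sources, most preferred first
    asset_class: Optional[str] = None   # None means "any asset class"
    country: Optional[str] = None       # None means "any country of domicile"
    field: Optional[str] = None         # None means "any data field"

    def matches(self, asset_class: str, country: str, field: str) -> bool:
        scope = ((self.asset_class, asset_class),
                 (self.country, country),
                 (self.field, field))
        return all(want is None or want == got for want, got in scope)

    def specificity(self) -> int:
        # Number of scope attributes that are pinned down by this rule.
        return sum(v is not None
                   for v in (self.asset_class, self.country, self.field))


def pick_value(rules, asset_class, country, field, values_by_source):
    """Return (source, value) from the most specific matching rule,
    skipping sources that supplied no value. Returns None if nothing fits."""
    candidates = [r for r in rules if r.matches(asset_class, country, field)]
    if not candidates:
        return None
    rule = max(candidates, key=PriorityRule.specificity)
    for source in rule.ranking:
        value = values_by_source.get(source)
        if value is not None:
            return source, value
    return None


# Illustrative data only: a broad default plus a field-level override.
rules = [
    PriorityRule(ranking=("VendorA", "VendorB")),
    PriorityRule(ranking=("VendorB", "VendorA"),
                 asset_class="bond", field="coupon"),
]
values = {"VendorA": 4.25, "VendorB": 4.5}

bond_choice = pick_value(rules, "bond", "US", "coupon", values)
equity_choice = pick_value(rules, "equity", "US", "coupon", values)
```

In this sketch the bond coupon comes from VendorB because the field-level rule is more specific than the default, while every other lookup falls back to the broad ranking. A real platform would need a richer tie-breaking policy than a simple specificity count.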

Beyond ranking preferences, the platform should also allow users to adjust the data itself, including values supplied by the most preferred source. This capability helps ensure that the data can be corrected or adapted as requirements evolve.

For end-users and analysts, transparency is paramount. The platform should enable a side-by-side view of all subscribed sources, providing insights into the specific values each vendor has supplied. While the main master view consolidates data into a ‘Gold Copy,’ the ability to compare individual source inputs is essential for making informed decisions about preferences, adjustments, and data investments.

Effective governance is another cornerstone of a successful platform. Features like four- or six-eyes approval processes (or maker-checker mechanisms) ensure robust oversight for manual data maintenance and user permissions, particularly when integrating data from multiple internal and external sources.
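
As a rough illustration of a maker-checker control, the Python sketch below holds a manual change until enough people other than the maker have approved it. The `ChangeRequest` class, the `apply_change` function, and the user names are hypothetical; I am assuming that four-eyes means one checker in addition to the maker and six-eyes means two.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeRequest:
    """Hypothetical pending manual edit awaiting approval."""
    maker: str
    field_name: str
    new_value: object
    required_checkers: int = 1          # assumed: 1 = four-eyes, 2 = six-eyes
    approvers: set = field(default_factory=set)

    def approve(self, user: str) -> None:
        if user == self.maker:
            raise PermissionError("maker cannot approve their own change")
        # A set means the same checker approving twice only counts once.
        self.approvers.add(user)

    @property
    def approved(self) -> bool:
        return len(self.approvers) >= self.required_checkers


def apply_change(record: dict, request: ChangeRequest) -> dict:
    """Write the change to the record only once it is fully approved."""
    if not request.approved:
        raise PermissionError("change is not fully approved")
    record[request.field_name] = request.new_value
    return record


# Six-eyes example: alice proposes, bob and carol must both approve.
request = ChangeRequest("alice", "coupon", 4.5, required_checkers=2)
request.approve("bob")
approved_after_one = request.approved
request.approve("carol")
record = apply_change({"coupon": 4.25}, request)
```

The point of the design is that the write path itself refuses unapproved changes, rather than relying on the user interface to hide the button.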

Smart, conditional load scheduling is essential for any Data Management System that integrates multiple data sources. These systems must determine when to load specific content and whether one data feed needs to be processed before it can be augmented by another. Additionally, they must remain operational even if a data file doesn’t arrive on time or in the expected format—an occurrence we all know is inevitable in a 24/7 schedule.
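
One way to picture conditional load scheduling is as a dependency graph of feeds. The Python sketch below uses the standard library's `graphlib.TopologicalSorter` to order the loads, and defers any feed whose file has not arrived or whose prerequisite was not loaded, so the rest of the schedule keeps running. The feed names, the `plan_loads` function, and the deferral policy are assumptions for illustration only.

```python
from graphlib import TopologicalSorter


def plan_loads(dependencies, arrived):
    """Split feeds into those that can load now and those to defer.

    dependencies: feed name -> set of feeds that must be loaded first
    arrived:      set of feeds whose files are present and readable
    """
    loaded, deferred = [], []
    # static_order() yields every feed after all of its prerequisites.
    for feed in TopologicalSorter(dependencies).static_order():
        prerequisites = dependencies.get(feed, ())
        if feed in arrived and all(p in loaded for p in prerequisites):
            loaded.append(feed)
        else:
            # Late or malformed file, or a prerequisite was deferred:
            # skip this feed but carry on with the others.
            deferred.append(feed)
    return loaded, deferred


# Hypothetical feeds: ratings augment prices, ESG augments ratings.
dependencies = {
    "vendor_a_prices": set(),
    "vendor_b_ratings": {"vendor_a_prices"},
    "vendor_c_esg": {"vendor_b_ratings"},
    "vendor_d_fx": set(),
}
arrived = {"vendor_a_prices", "vendor_c_esg", "vendor_d_fx"}

loaded, deferred = plan_loads(dependencies, arrived)
```

Here the ratings file is missing, so both it and the ESG feed that depends on it are deferred, while prices and FX still load. In practice a deferred feed would be retried on a later cycle.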

Finally, the foundation of any multi-sourced data solution lies in its underlying data model. A strong model enables the recording, standardization, and consolidation of content from diverse sources. It also supports complex matching and merging functionalities, which go well beyond simple identifier matching to create a cohesive, reliable dataset.
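
To show what matching beyond a single identifier might look like, the Python sketch below tries a tiered set of keys: the primary identifier first, then a composite of other attributes when the identifier is missing. The `find_match` function, the choice of tiers, and the records (including the made-up identifiers) are assumptions; production matching logic is considerably more involved.

```python
# Assumed tiers, tried in order: a primary identifier, then a composite key.
MATCH_TIERS = (("isin",), ("ticker", "exchange"))


def find_match(incoming, master):
    """Return (matched_record, key_fields), or (None, None) if no tier
    produces exactly one candidate in the master list."""
    for key_fields in MATCH_TIERS:
        key = tuple(incoming.get(f) for f in key_fields)
        if None in key:
            continue  # incoming record lacks this key, try the next tier
        hits = [rec for rec in master
                if tuple(rec.get(f) for f in key_fields) == key]
        if len(hits) == 1:
            return hits[0], key_fields
        # Zero or several hits: treat as unresolved and fall through.
    return None, None


# Illustrative records only; the identifiers are invented.
master = [
    {"isin": "GB0000000001", "ticker": "ABC", "exchange": "XLON"},
    {"isin": "US0000000002", "ticker": "ABC", "exchange": "XNYS"},
]
incoming = {"isin": None, "ticker": "ABC", "exchange": "XLON"}

match, matched_on = find_match(incoming, master)
```

The incoming record has no identifier, yet it still resolves to the London listing through the composite key. Records that match nothing, or match ambiguously, come back unresolved so they can be routed to a person for review.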

In my next installment on the importance of a flexible data platform, I’ll take a look at the five critical aspects of an advanced Extract, Transform, and Load (ETL) process.